Commentary: The Ethical Use of AI in the Security, Defense Industry

Artificial intelligence already surrounds us. The odds are that when you type an email or text, a grayed-out prediction appears ahead of what you have already typed.

The “suggested for you” portion of some web-based shopping applications predicts items you may be interested in buying based on your purchasing history. Streaming music applications create playlists based on your listening history.

A device in your home can recognize a voice and answer questions, and your smartphone can unlock after recognizing your facial features. Artificial intelligence is also advancing rapidly within the security and defense industry. When AI converges with autonomy and robotics in a weapons system, we should ask ourselves, “From an ethical standpoint, where should we draw the line?”

Air Force pilot Col. John Boyd developed a decision-making model referred to as the OODA loop (observe, orient, decide, act) to explain how to increase the tempo of decisions to outpace, outmaneuver and ultimately defeat an adversary. The objective is to make decisions faster than an opponent, forcing the opponent to react to one's actions and enabling a force to seize the initiative. But what if the adversary is a machine?

Humans can no longer outpace the processing power of a computer and haven’t been able to for quite a while. As a result, for many tasks a machine’s OODA loop is faster. In some instances, it now makes sense to delegate certain decisions to a machine or to let the machine recommend a decision to a human.

From an ethical viewpoint, where should we allow a weapon system to decide an action and where should the human be involved?

In February 2020, the Defense Department formally adopted five principles of artificial intelligence ethics as a framework to design, develop, deploy and use AI in the military. To summarize, the department stated that AI will be responsible, equitable, traceable, reliable and governable.

It is an outstanding first step to guide future developments of AI; however, as with many foundational documents, it lacks detail. An article from DoD News titled “DoD Adopts 5 Principles of Artificial Intelligence Ethics” states that personnel will exercise “appropriate levels of judgment and care while remaining responsible for the development, deployment and use of AI capabilities.”

Perhaps the appropriate level of judgment depends on the maturity of the technology and the action being performed.

Machines excel at narrow AI, which refers to accomplishing a single, well-defined task such as the examples mentioned above. Artificial general intelligence, or AGI, refers to intelligence that resembles a human’s ability to use multiple sensory inputs to conduct complex tasks.

There is a long way to go before machines reach that level of complexity. While that milestone may prove impossible to reach, many leading AI experts believe it will eventually be achieved.

AIMultiple, a technology industry analyst firm, published an article that compiled four surveys, dating back to 2009, of 995 leading AI experts.

In each survey, a majority of respondents believed that researchers would achieve AGI, on average by the year 2060. Artificial general intelligence is not inevitable, though, and technologists have historically tended to be overly optimistic in their predictions about AI. This uncertainty, however, is exactly why we should consider the ethical implications of AI in weapon systems now.

One of those ethical concerns is “lethal autonomous weapon systems,” which can autonomously sense the environment, identify a target and make the determination to engage without human input. These weapons have existed in various forms for decades, but nearly all of them have been defensive in nature, ranging from the simplest form, a landmine, up to complex systems such as the Navy’s Phalanx Close-In Weapon System.

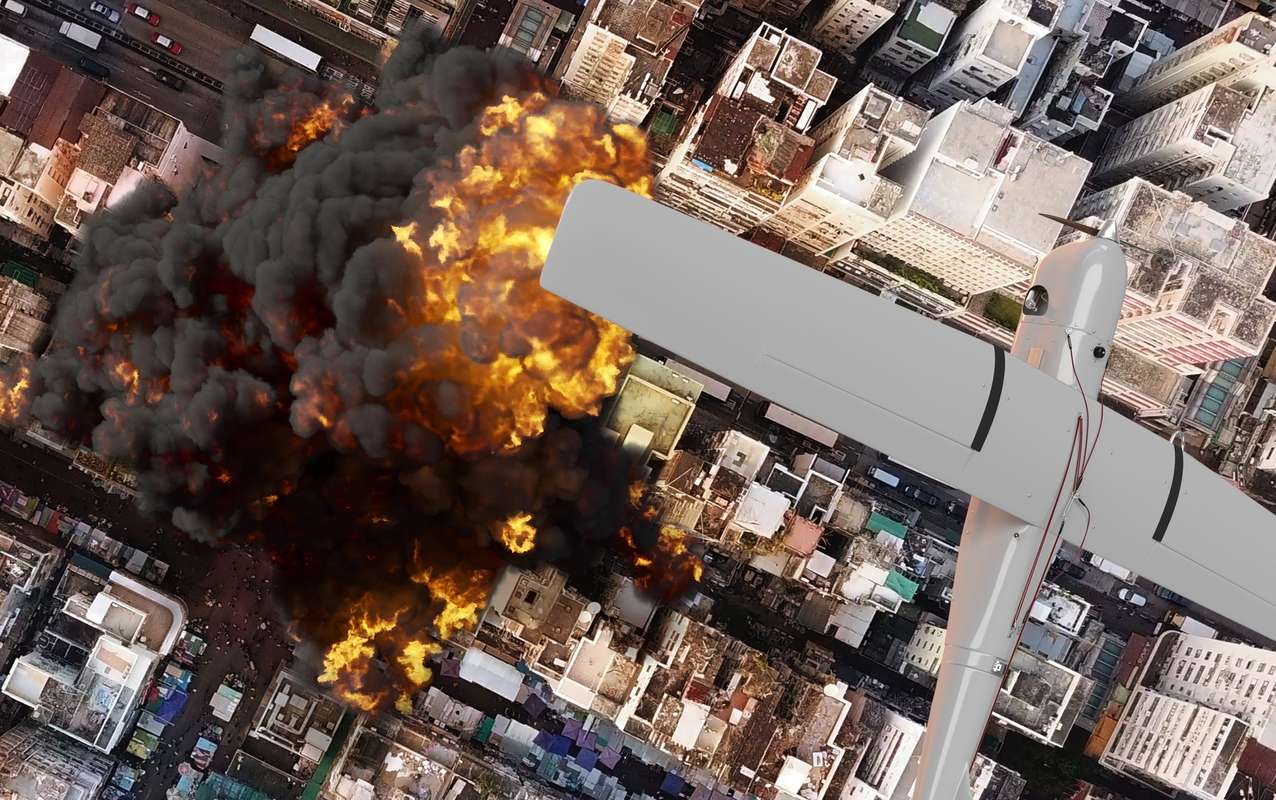

But in March 2020 in Libya, the first suspected case of an offensive lethal autonomous weapon emerged when a Turkish-built Kargu-2 unmanned aerial system is suspected of having autonomously engaged human targets with a weapon.

That should concern everyone, because current technology for offensive weapons isn’t mature enough to autonomously identify people and determine hostile intent.

The most difficult portion of the kill chain to automate is the positive identification of a target, known as “autonomous target recognition.” Object recognition is performed using machine learning, typically deep learning with a convolutional neural network. Some forms of object recognition using computer vision, such as identifying military weapons like a tank or artillery piece, can be performed today. But even then, environmental challenges such as obscuration of the target, smoke, rain and fog may reduce accuracy.

Methods also exist to spoof or trick computer vision into misrecognizing an object; these are known as typographic attacks or adversarial images.

In one recent demonstration, a computer vision system was fooled by a sticky note placed on an object bearing text that named a different object. As thorny as that problem is, identifying an individual person and determining their intent is even more difficult for a machine.

“Signature drone strikes” are engagements based on a target’s pattern of life combined with other sources of intelligence. Signature strikes are conducted by teams of highly trained professionals who provide context and evaluate the intent of the target, but even then, they are controversial and certainly not infallible, as the drone strike in Kabul in late August 2021 showed. Imagine if signature strikes occurred without a human in the loop. That’s what an offensive autonomous weapon system would do.

Even if we solved every problem of object and person recognition, one important element would still be missing, and it is one where humans outperform machines: context. Within the rules of engagement, we seek to answer three questions before engaging a target. Legally, can we engage the target? Ethically, should we engage the target? And morally, how should we engage it? With narrow AI in weapon systems, we may be able to answer the legal question some of the time, but we will never be able to satisfy the ethical and moral questions with a machine.

One scenario in which autonomous target recognition may fail is a child holding a weapon, or even a toy weapon. Computer vision may enable a machine to accurately identify the child as a human with a weapon, and legally, if permitted within the rules of engagement, that may be enough justification to authorize a strike against that target. However, a human observing the same individual would be able to discern that it is a child and ethically would not authorize an engagement. How would a machine distinguish between a child holding a realistic-looking toy gun and a short adult holding a rifle?

Situations like this and myriad others require a human’s judgment instead of a machine’s obedience to programmed commands.

So where is AI best implemented in weapon systems? Where it helps a human make a faster, more informed decision; where it reduces the workload of task-saturating functions for a human operator; and where a human is too slow to react to a threat. Two prime examples stand out. First, products that employ phased-array radars can use artificial intelligence at the edge to analyze the flight characteristics of detected objects and eliminate false-positive targets such as birds. Second, autonomous drones that hunt down other drones and capture them with a net can use AI to determine the best positioning and timing for firing the net based on the target’s velocity and position.

These systems are defensive in nature, reduce operator workload, and speed up the OODA loop to seize the initiative.

Where should the line be drawn when it comes to AI in weapon systems? Is it the point where the decision to take another human life is delegated to a machine without any human input? That is the current stance within the Defense Department. However, adversaries may not share the same ethical concerns when implementing artificial intelligence into their weapons.

It’s currently easy to say that a human will always be involved in the kill chain, but how long can the United States maintain that position if it leaves U.S. forces with a slower OODA loop than their adversaries? Will the U.S. military one day be forced to choose between allowing a machine to kill a human or losing its competitive advantage?

Opponents of keeping a human in the kill chain will also argue that humans make mistakes in war and that a machine is never tired, stressed, scared or distracted. If a machine makes fewer mistakes than a human, resulting in less collateral damage, isn’t there a moral imperative to minimize suffering by using AI?

While competitive advantages and reduced collateral damage are valid arguments for allowing a machine to decide to kill a human in combat, war is a human endeavor, and there must be a human cost to undertaking it. It must always be difficult to kill another human being, lest we risk losing what makes us human to begin with. That is where we should take a firm ethical stance when considering AI in future weapons.
